In 2030, 80% of all insurance policies will be sold in the metaverse, seamlessly transacted using distributed ledger technology, and claims will be assessed and paid automatically in seconds.

Will they? Probably not. Instead, in 2030, 80% of all policies and even claims will again be handled using pen and paper, due to increasing regulation. Is this our future? We hope not. But how will the future of technology in insurance look?

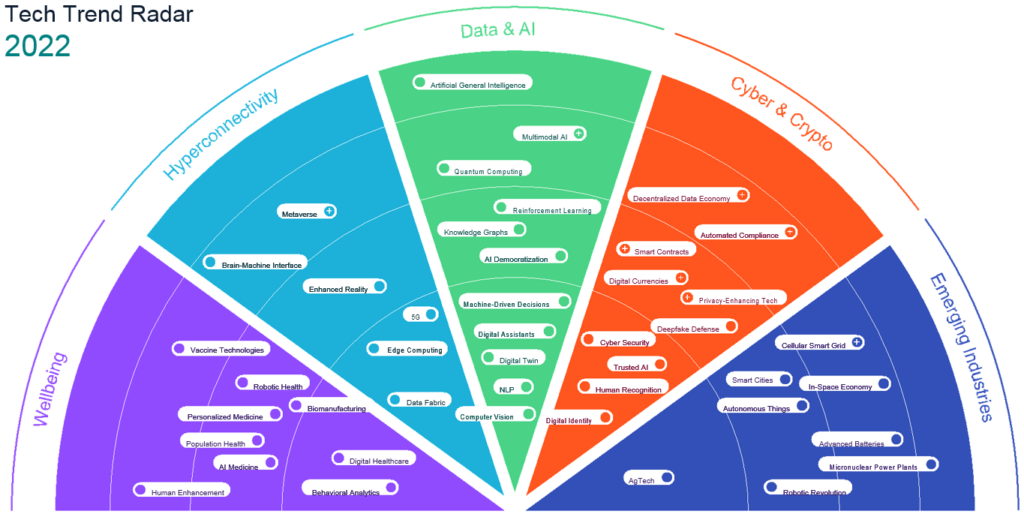

According to the Munich Re Tech Trend Radar, new technology is not a goal in itself. It needs to create additional value for customers and partners and must be reliable and trustworthy.

Corporate culture has to embrace and adopt the opportunities to stay competitive or even gain a competitive advantage.

Let’s start with evidence and grow our mindset to seize new opportunities:

1. Digital business models: Car manufacturers like Tesla, Daimler and Volvo embed insurance into their vehicle sales journey. Embedded insurance, as one example of such a business model, is a reality in China and in the implementation stage in Europe for cars, travel, retail and more. Flexible API-based connections and fast product adoption are just two of the key ingredients that insurers must implement to keep up with new technology trends in the insurance sector.

2. Broker and agent-led businesses have been zoomed into the next engagement level – the pandemic has truly accelerated the change that has just begun. Besides communication platforms, we strongly recommend the adoption of underwriting solutions that have data augmentation and AI features like insurance-specific, natural-language processing (NLP).

3. Data and natural-language understanding and processing: At the heart of insurance, underwriters and claims handlers need to fully understand the submissions and loss data that brokers and insurers provide. One of the biggest technology levers for insurers is centered around NLU/NLP, as it promises to save insurers valuable time that can better be spent on edge cases. We see the advances in NLP, together with context-sensitive multimodal AI, as the ultimate key to further improving customer experiences and risk assessments, and to shortening the time taken to accept risks and pay valid claims.

4. Investments and ESG: Insurers are directly impacted by climate change and environmental damage. We see investments and risk solutions as a natural fit to combat the existing threats. Investments into carbon capture, energy storage and renewable energy solutions are a few examples.

These are only a few of the topics covered by the Tech Trend Radar, which also explores AI medicine, NFT, blockchain and metaverse opportunities, as well as many others.

Technology impacts and Trend Evolution

The wellbeing trends primarily address insurers that offer health and life insurance products. It is key for them to understand that, with new technologies in digital health, telemedicine and even the biotech field, not only does the demand for new insurance solutions increase but also key functions like underwriting and risk management can be enhanced with new data streams and insights, e.g., with population health data.

…………………..

1. Metaverse

The trend field of Hyperconnectivity is all about network and infrastructure technologies. What is “hyper” in hyperconnectivity? We speak of an extended form of connectivity that links various systems, devices and entities, e.g., through data fabric infrastructure, and provides more bandwidth and speed like the 5G mobile phone network standard. Hyperconnectivity is the prerequisite for building digital infrastructures.

It also comprises technologies that bridge the gap between the physical and the digital world. The most vibrant trend in 2022 in this trend field is the so-called “metaverse”, a fully virtual world that is accessible via virtual reality goggles.

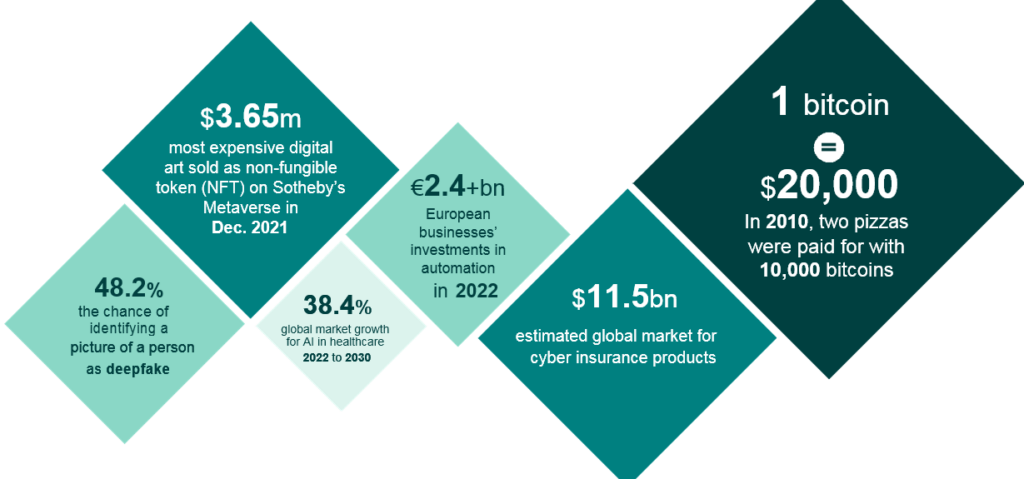

As of today, there is not just one metaverse but several such virtual worlds, hosted by tech companies like Facebook. Insurers should watch this evolution of virtual worlds closely.

The metaverse describes an immersive digital space that blends reality and virtuality through sophisticated augmented and virtual reality technology. The vision goes beyond the gaming worlds that have been known for years: Metaverse advocates foresee a multitude of interoperable platforms on which users can present as avatars, cooperate for business or leisure, own assets, and innovate.

Big technology companies like Meta and Microsoft are at the forefront of the movement and have high stakes in related technology, including virtual reality headsets and proprietary metaverse worlds. On the other hand, community-driven developments, often rooted in gaming, are building alternative metaverses that aim to grant governance rights to users.

2. Enhanced Reality

With the unforeseen increase in meetings being held in virtual set-ups due to the pandemic, now comes the need for enhancing communication technologies. The next generation of virtual meetings is real-time holographic communication, another move towards merging reality and virtuality. Holograms are not new but have lacked broad adoption to date.

In the future, physical and graphical objects will interact more and more naturally, creating a mixed reality.

For example, a person who looks at a product will see contextual information visually integrated or overlaid with that person’s view. Virtual and augmented worlds are often used as a novelty for customer engagement, e.g., using head-mounted displays (HMDs). By swiftly addressing growing customer concerns, the insurance industry has a potentially significant risk assessment and revenue opportunity.

3. Edge Computing

Edge computing processes data as close to its source as possible – at the edge of the network structure.

Transmitting large amounts of raw data over a network or into a cloud puts a tremendous load on network resources. In some cases, it is much more efficient to process data near its source and send only the data that has value over the network.

Edge application services significantly reduce the volumes of data that must be moved, thereby reducing transmission costs and improving speed. For example, an intelligent WiFi security camera using edge computing analytics might only transmit data when a certain percentage of pixels change between two images, indicating motion. The move towards edge computing is driven by mobile computing, the decreasing cost of computer components, and the sheer number of networked devices on the Internet of Things.
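The camera example above can be sketched in a few lines. This is a minimal illustration, not a production motion detector: frames are assumed to be small grayscale images given as lists of rows, and the names `changed_fraction`, `should_transmit`, `pixel_delta` and `min_fraction` are hypothetical choices for this sketch.

```python
def changed_fraction(prev, curr, pixel_delta=25):
    """Share of pixels whose brightness differs by more than pixel_delta."""
    total = 0
    changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_delta:
                changed += 1
    return changed / total if total else 0.0


def should_transmit(prev, curr, pixel_delta=25, min_fraction=0.05):
    """Decide on the device whether a frame is worth sending upstream."""
    return changed_fraction(prev, curr, pixel_delta) >= min_fraction


# Two 4x4 grayscale frames: the second has one bright pixel (assumed motion).
still = [[0] * 4 for _ in range(4)]
moved = [row[:] for row in still]
moved[2][1] = 255

if should_transmit(still, moved):
    print("motion detected, sending frame")
```

Only the comparison runs on the device; the network sees a frame only when the threshold is crossed, which is where the savings in transmission volume come from.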

4. Brain-machine Interface

A brain-machine interface interprets the user’s brain patterns to control external software and hardware.

Brain-machine interfaces are already piloted as spelling/writing devices by people with paraplegia in order to communicate. Current projects aim to extend the scope of these devices to enable greater brain-robot interaction. These hybrid techniques combine brain, gaze and muscle tracking to offer hands-free interaction. Even though it is already possible to control virtual objects (the world’s first mind-controlled drone race was held in 2016), there are still major challenges.

The need to wear a headband or cap to interpret signals is a serious limitation in most consumer or business contexts. As a result, there is as yet no significant market for the use of these devices.

5. Data Fabric

A data fabric is a fabric-like data management infrastructure for distributed networks that unifies formerly disparate, inconsistent data and enables it to be shared.

Data fabrics are designed to enable applications and tools to access and transform different types of data, regardless of where it is stored, while supporting established data standards and systems.

Data fabrics create unified data environments in distributed computing networks – even among reinsurer, insurer, agent and third-party networks. They provide a solution to the ever-growing data quality problem which is a key challenge for the successful implementation of all data-enhanced technologies.

Data fabrics provide a standardization solution for data coming from multiple sources and being provided to various stakeholders in a platform of their choice.
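To make the standardization idea concrete, here is a small sketch of one building block: adapters that map records from differently shaped feeds onto one shared schema. The feeds, field names and the `Claim` schema are all invented for illustration; a real data fabric adds cataloguing, governance and access layers that are not shown here.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class Claim:
    """Hypothetical unified schema shared by all stakeholders."""
    claim_id: str
    loss_date: date
    amount_eur: float
    source: str


def from_broker(row):
    # Assumed broker feed: DD.MM.YYYY dates, amounts in cents.
    return Claim(
        claim_id=row["ref"],
        loss_date=datetime.strptime(row["lossDate"], "%d.%m.%Y").date(),
        amount_eur=row["amountCents"] / 100,
        source="broker",
    )


def from_insurer(row):
    # Assumed insurer feed: ISO dates, amounts in euros as text.
    return Claim(
        claim_id=row["claim_id"],
        loss_date=date.fromisoformat(row["loss_date"]),
        amount_eur=float(row["amount"]),
        source="insurer",
    )


ADAPTERS = {"broker": from_broker, "insurer": from_insurer}


def unify(feeds):
    """Convert every record to the shared schema, ordered by loss date."""
    claims = [ADAPTERS[name](row) for name, rows in feeds.items() for row in rows]
    return sorted(claims, key=lambda c: c.loss_date)


feeds = {
    "broker": [{"ref": "B-1", "lossDate": "03.02.2022", "amountCents": 1250}],
    "insurer": [{"claim_id": "I-9", "loss_date": "2022-01-15", "amount": "980.50"}],
}
unified = unify(feeds)
```

Downstream tools then work against `Claim` only and do not need to know which party supplied a record or in what format.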

6. Computer Vision

Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, as well as extracting data from the real world. For example, computer vision can help an AI system, such as a robot, to navigate through an environment by providing information through vision sensors.

Computer vision is a combination of cameras, edge/cloud computing and specialized software, as well as AI, which enables computers to recognize (real-world) objects. The system is thus able to deduce from a couple of images what objects it is seeing. By means of deep learning, computer vision trains neural networks for processing and analyzing images.

Computer vision is a significant driver for applications requiring visual data from sensors, such as robots and drones, autonomous things and IoT devices.

Bricks-and-mortar stores can use computer vision to provide their customers with product recommendations during their shopping experience, based on the items already added to their shopping cart.

7. Virtual Digital Twin

A virtual counterpart of a real object that enables IT systems to interact with it rather than the real object directly.

Basically, a digital twin helps to improve maintenance, upgrades, repairs and operation of the actual object. For example, it could be a model of a sound system that enables a remote user to control the physical system with buttons on a mobile device.

Digital twins can also be used for product development, as they enable product testing and simulations without having to actually construct a physical object, thus driving innovation efficiency.

Even though the idea of the digital twin is still evolving, strong growth is expected. Hundreds of millions of things will most likely have digital twins in the future.

8. Machine-driven Decisions

Business decisions that are derived from and backed by verifiable, quantitative data analysis.

A tremendous increase in data has contributed to the rise of a “data-driven” era, where big data analytics are used in every sector of the world economy.

The growing expansion of available data is a recognized trend worldwide, while valuable knowledge arising from the information comes from data analysis processes.

Today, algorithms scan every bit and piece of data that has been collected on a specific issue – such as the field of interests of a certain client – and extract all the relevant information. Conclusions are derived and logical decisions made based on this rich set of information. However, the success of data-driven decisions relies on the quality of the data gathered and the effectiveness of its analysis and interpretation.

9. Natural-language Processing

Natural-language processing (NLP) can ease human-computer interaction and leads to machines understanding and acting on text.

The quality of NLP has improved significantly; visible accomplishments include technologies such as Microsoft’s Skype Translator, which translates in real time from one spoken language to another, or Google’s information cards that offer answers instead of a list of page links.

For most enterprises, the simplest and most immediate use cases for NLP are typically related to improved customer service, employee support, and processing claims and policy information.

The introduction of the Generative Pre-trained Transformer 3 (GPT-3) by OpenAI in 2020 was a milestone for NLP technology. GPT-3 has 175 billion parameters and is able to generate texts of such high quality that evaluators have had difficulty distinguishing them from articles written by humans. Yet limitations remain. While the technology ranks high in syntactic capabilities (i.e., the ability to statistically associate words), it is weak as regards semantics and context.

10. Digital Assistants

Digital assistants can perform more intricate tasks and are increasingly moving towards becoming more human-like.

Progress made with behavioral analytics, machine learning and natural-language processing enables digital assistants to perform more complex tasks and evolve into more collaborative task agents. Their increasing complexity involves shifting from text-based to voice-based communication, as well as from answering straightforward questions or receiving simple commands to offering advice or anticipating the user’s actions.

Digital assistants don’t need to be asked explicitly by the user to perform a task. They can learn from user behavior and act on behalf of their user.

Today, as more and more gadgets ship with digital assistant capabilities, the field has grown ever more important. Especially in the banking and insurance sectors, where institutions hold particularly vast amounts of financial data, digital assistants have great potential for semi-automating complex business processes such as claims management.

On the other hand, digital assistants are becoming more pervasive in households and other facilities. They can help with everyday tasks or with the care of elderly residents, for example.

11. AI Democratization

AI democratization refers to making AI technology available to the wider public.

Previously, AI was usable only by a select few data scientists, analysts and engineers. However, with the advent of features such as AutoML and Low Code/No Code, the once mysterious world of AI is increasingly being used by non-data scientists.

With AutoML, the process of machine learning is shortened so that the user only has to pour in the data. The automated process then allows the input to be interpreted without the programming-heavy middle steps, as is the case with traditional machine learning. Likewise, Low Code or No Code platforms also enable people with limited programming knowledge to develop applications by having point-and-click or other graphical interfaces and model-driven logic. These advances enable AI democratization, which gives experts from different industries (e.g., healthcare, manufacturing, agriculture) the power to use AI for their respective purposes.

The possibility to exploit the vast data with a bigger group of experts can improve today’s healthcare and thus the insurance industry. With the potential to generate more insights in patient care, for instance, predictive care or earlier diagnosis could lower the risks associated with each individual.

12. Knowledge Graphs

Knowledge graphs are used to enhance search results and deliver valuable insights by creating relationships between data from different sources.

AI applications in companies cannot reach their full potential when data is treated in silos and not fused with reasoning. Knowledge graphs are recognized as a new wave of AI that brings together machine learning and graph technologies to overcome these obstacles. The goal is for AI to gain context and to process and store information close to the way a human brain does.

Humans constantly interpret information using background and common-sense knowledge. A knowledge graph similarly represents semantics by describing entities and their relationships in a graph-like structure.

This process is called semantic enrichment and utilizes natural-language processing (NLP). The result is a complex network of information from which missing facts can be inferred far better than from a conventional database alone.
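A toy example may help to show what "inferring missing facts" means. The sketch below stores facts as (subject, predicate, object) triples and applies a single hand-written rule, namely that `located_in` is transitive. The entities and the rule are invented for illustration; real knowledge graph systems use dedicated graph stores and much richer reasoning.

```python
# Facts as (subject, predicate, object) triples. All entities are made up.
TRIPLES = {
    ("Policy-17", "covers", "Warehouse-A"),
    ("Warehouse-A", "located_in", "Hamburg"),
    ("Hamburg", "located_in", "Germany"),
    ("Germany", "located_in", "Europe"),
}


def infer_located_in(triples):
    """Add transitive located_in facts until no new fact appears."""
    facts = set(triples)
    changed = True
    while changed:
        changed = False
        located = [(s, o) for s, p, o in facts if p == "located_in"]
        for s, mid in located:
            for mid2, o in located:
                if mid == mid2 and (s, "located_in", o) not in facts:
                    facts.add((s, "located_in", o))
                    changed = True
    return facts


def regions_of(entity, facts):
    """All places an entity is located in, directly or by inference."""
    return sorted(o for s, p, o in facts if s == entity and p == "located_in")


facts = infer_located_in(TRIPLES)
print(regions_of("Warehouse-A", facts))
```

The original data never states that the warehouse is in Germany or Europe; those facts follow from the relationships, which is the kind of answer a flat table lookup would miss.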

The technology has valuable implications for companies. Prominent use cases are search engines, question-answering services and social networks. But any company faced with disconnected, heterogeneous and ever-growing data sources can use knowledge graphs to avoid data overload and generate big data value. Knowledge graphs facilitate queries and help integrate company data with other internal as well as external data, yielding novel insights.

13. Reinforcement Learning

Reinforcement learning (RL) is an iterative learning process where a software agent faces a game-like situation and employs trial and error to find a solution.

In reinforcement learning, a machine learning model is trained in an interactive environment by trial and error, in which the model is rewarded for being right. Although the designer sets the reward policy, i.e., the rules of the game, the designer doesn’t give the model hints or suggestions on how to solve the problem. Instead, the model uses feedback from its own actions and experiences to improve. Its goal is to maximize the total reward.
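The trial-and-error loop can be illustrated with tabular Q-learning, one common reinforcement learning method, on a deliberately tiny game. The corridor environment, the reward of 1 at the goal and all parameter values below are assumptions made for this sketch.

```python
import random

N_STATES = 5        # corridor cells 0..4; reaching cell 4 ends the game
ACTIONS = (-1, 1)   # step left or step right


def step(state, action):
    """Rules of the game: move, and receive reward 1 only at the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values purely from the agent's own experience."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(200):  # cap on steps per episode
            # Explore at random sometimes, and whenever values are tied.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, ACTIONS[a])
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if done:
                break
    return q


def greedy_policy(q):
    """Preferred action in each non-goal cell after training."""
    return [ACTIONS[1] if row[1] > row[0] else ACTIONS[0] for row in q[:-1]]


policy = greedy_policy(train())
```

Nothing in the code tells the agent that "right" is the correct direction. It only receives the reward signal and gradually assigns higher values to the actions that lead to it.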

The models are designed to solve specific kinds of learning problems. Fields of applications include self-driving cars, industry automation, trading and finance, NLP, healthcare, etc.

Although significant progress has been made in the field, reinforcement learning is still mainly a research area.

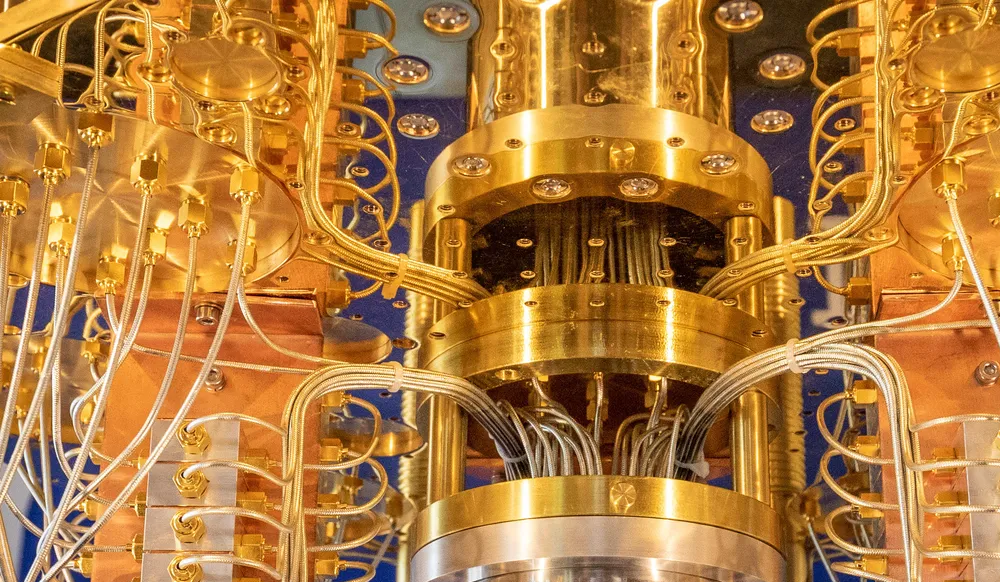

14. Quantum Computing

Quantum computing stands for unprecedented computing power. It could trigger a new wave of technological development over the next five to ten years.

An ordinary computer uses bits, each represented by either 1 or 0. Double the bits means double the processing power. Quantum computing, on the other hand, uses so-called qubits, which can be in a combination of 1 and 0 at the same time. Not only that, but a process called “entanglement” also allows each extra qubit to increase the processing power of a quantum computer exponentially.
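The idea of a qubit being "both 1 and 0" can be sketched with plain arithmetic. The snippet below simulates a single qubit on an ordinary computer by applying a Hadamard gate, a standard operation that puts a qubit into an equal superposition, and counts how many amplitudes are needed to describe several qubits. The function names are chosen for this sketch, and a classical simulation like this says nothing about the speed of real quantum hardware.

```python
import math


def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state (amp_0, amp_1)."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))


def probabilities(state):
    """Chance of measuring 0 or 1: the squared magnitude of each amplitude."""
    return tuple(abs(a) ** 2 for a in state)


def amplitudes_needed(n_qubits):
    """Size of the state description for n qubits: it doubles per qubit."""
    return 2 ** n_qubits


zero = (1.0, 0.0)            # a qubit that is definitely 0
superposed = hadamard(zero)  # now an equal mix of 0 and 1

print(probabilities(superposed))
print(amplitudes_needed(12))
```

The doubling in `amplitudes_needed` is the source of the exponential growth mentioned above: every additional qubit doubles the size of the state a classical machine would have to track.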

A quantum computer can solve certain problems in a fraction of the time it takes conventional computers. Such machines may soon even be able to handle more complex tasks.

Google’s quantum computer, Sycamore, achieved its first real-world success in 2020 when it simulated a chemical reaction of a molecule using 12 qubits. But China claims to have a quantum computer that is ten billion times faster than Sycamore. China has to date invested $10bn in its National Laboratory for Quantum Information Sciences.

15. Multimodal AI

Multimodal AI combines inputs from different sources of data to achieve more accurate results. Various industries including automotive, consumer devices and healthcare are or could be disrupted by multimodal AI.

The sources of data can vary and include texts, audios and images, amongst others. By analyzing these different types, AI could contextualize the data and thereby achieve better performance than traditional unimodal AI.

For instance, advanced driver assistance systems (ADAS) and human-machine interface (HMI) assistants are adopting the technology. Consumer devices are starting to use multimodal AI for security or payment authentication purposes. Healthcare is also exploring this field for diagnosis by combining medical images with patients’ electronic health records.

16. Artificial General Intelligence

Expressed concisely and simply: artificial general intelligence (AGI) – also called “strong AI” – doesn’t yet exist, since today’s AI technology cannot be proven to possess the equivalent of human intelligence. It lacks common sense, intelligence and extensive methods for self-maintenance or reproduction. So today, AGI is only a subject for science fiction and “what if” discussions.

Progress on artificial intelligence has so far been limited to so-called “weak AI”, in other words special-purpose AI limited to specific, narrower use cases. Even though it may be possible to build a machine that approximates human cognitive capabilities, we are likely to be decades away from having the necessary research and engineering.

…………………………….

AUTHORS: Martin Thormählen, Chief Technology Officer Munich Re and Daniel Grothues, Head of Enterprise Architecture ERGO